Bagging in Machine Learning GeeksforGeeks

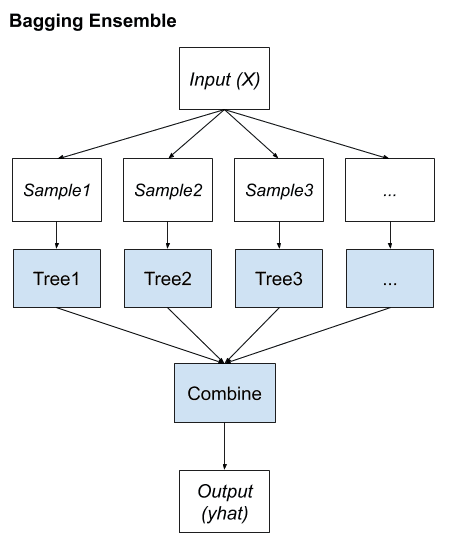

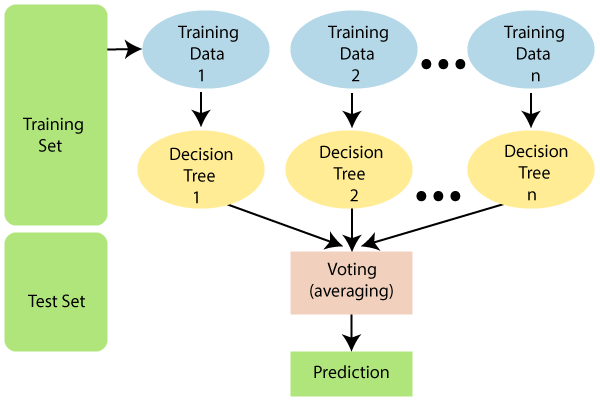

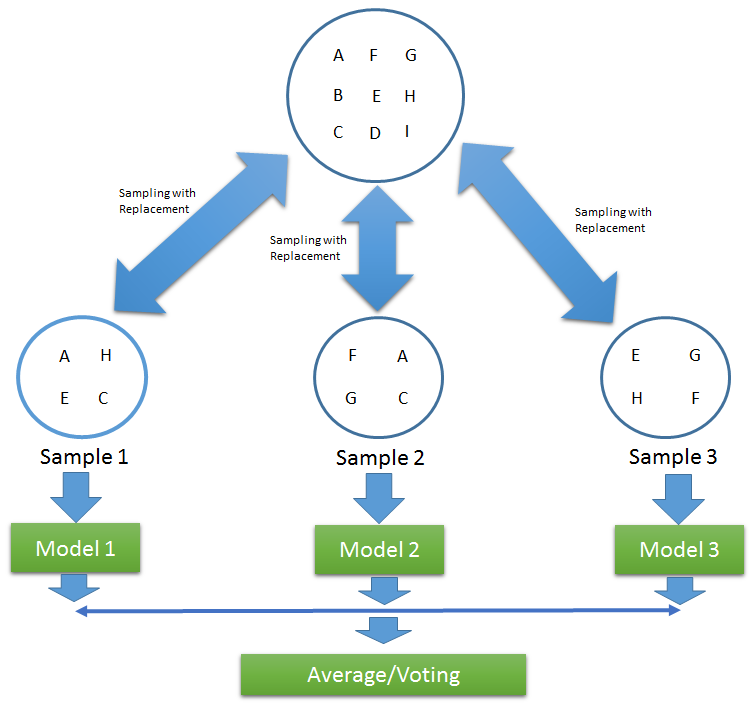

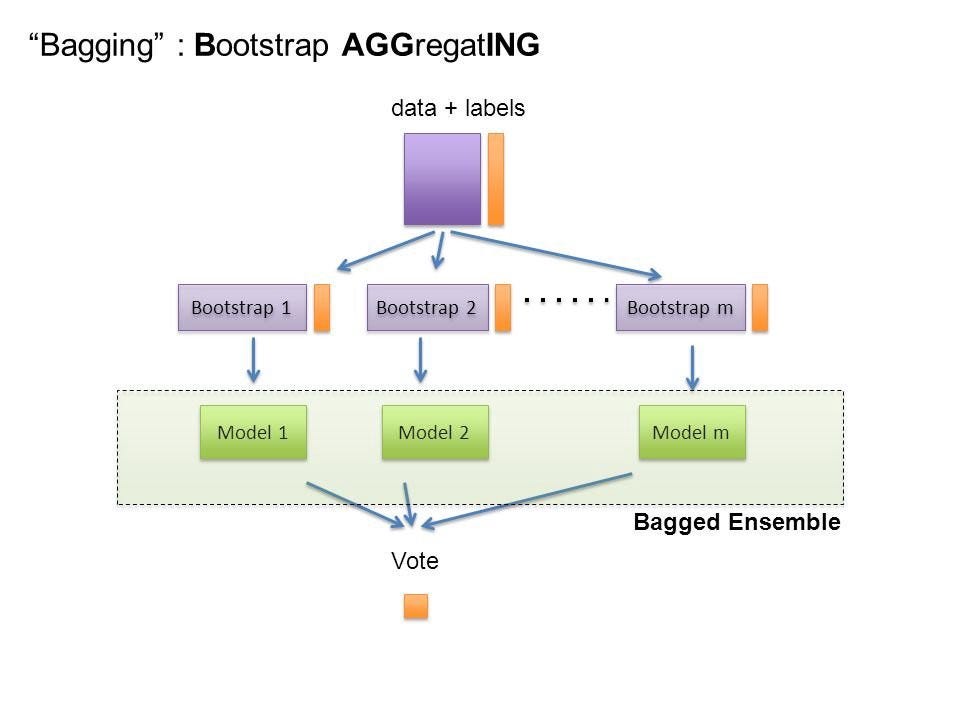

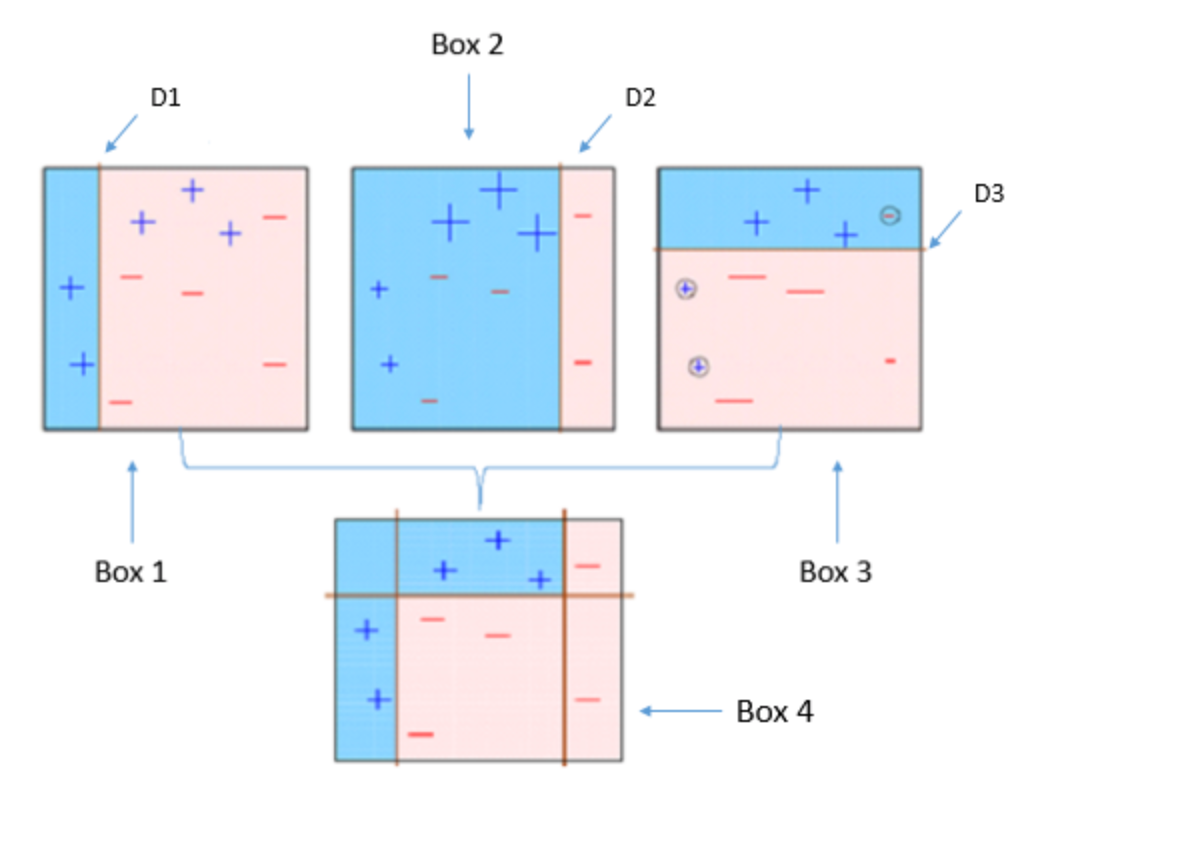

Here the concept is to create several subsets of the training sample, each drawn at random with replacement. Bagging is one of the first and simplest ensemble meta-algorithms; although it is not often used on its own these days, it serves as the basis for subsequent ensembles such as random forests.
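A minimal sketch of the sampling step, using only Python's standard library. The function names and the toy 10-row training sample are hypothetical, chosen for illustration; each subset has the same size as the original sample and is drawn with replacement.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return rng.choices(data, k=len(data))

def make_subsets(data, n_subsets, seed=0):
    """Create n_subsets bootstrap subsets; each one can train its own model."""
    rng = random.Random(seed)  # seeded so the subsets are reproducible
    return [bootstrap_sample(data, rng) for _ in range(n_subsets)]

training_sample = list(range(10))  # toy stand-in for 10 training rows
subsets = make_subsets(training_sample, n_subsets=3)
for s in subsets:
    # Some rows appear more than once, others not at all.
    print(sorted(s))
```

Because rows are drawn with replacement, a large bootstrap sample contains on average only about 63.2% (that is, 1 - 1/e) of the distinct original rows; the rest are repeats, which is what makes each subset, and therefore each model, slightly different.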

A Gentle Introduction To Ensemble Learning Algorithms

Poor Quality of Data.

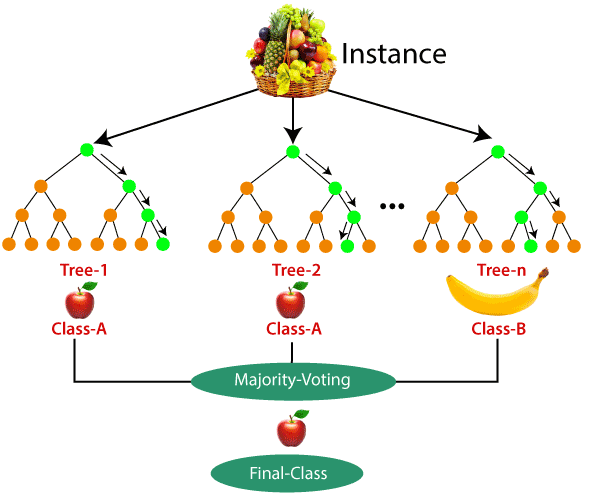

Predict the class of the instance using each classifier, then return the class that was predicted most often. Machine learning is an essential skill for any aspiring data analyst or data scientist, and for anyone who wishes to turn massive amounts of raw data into trends.

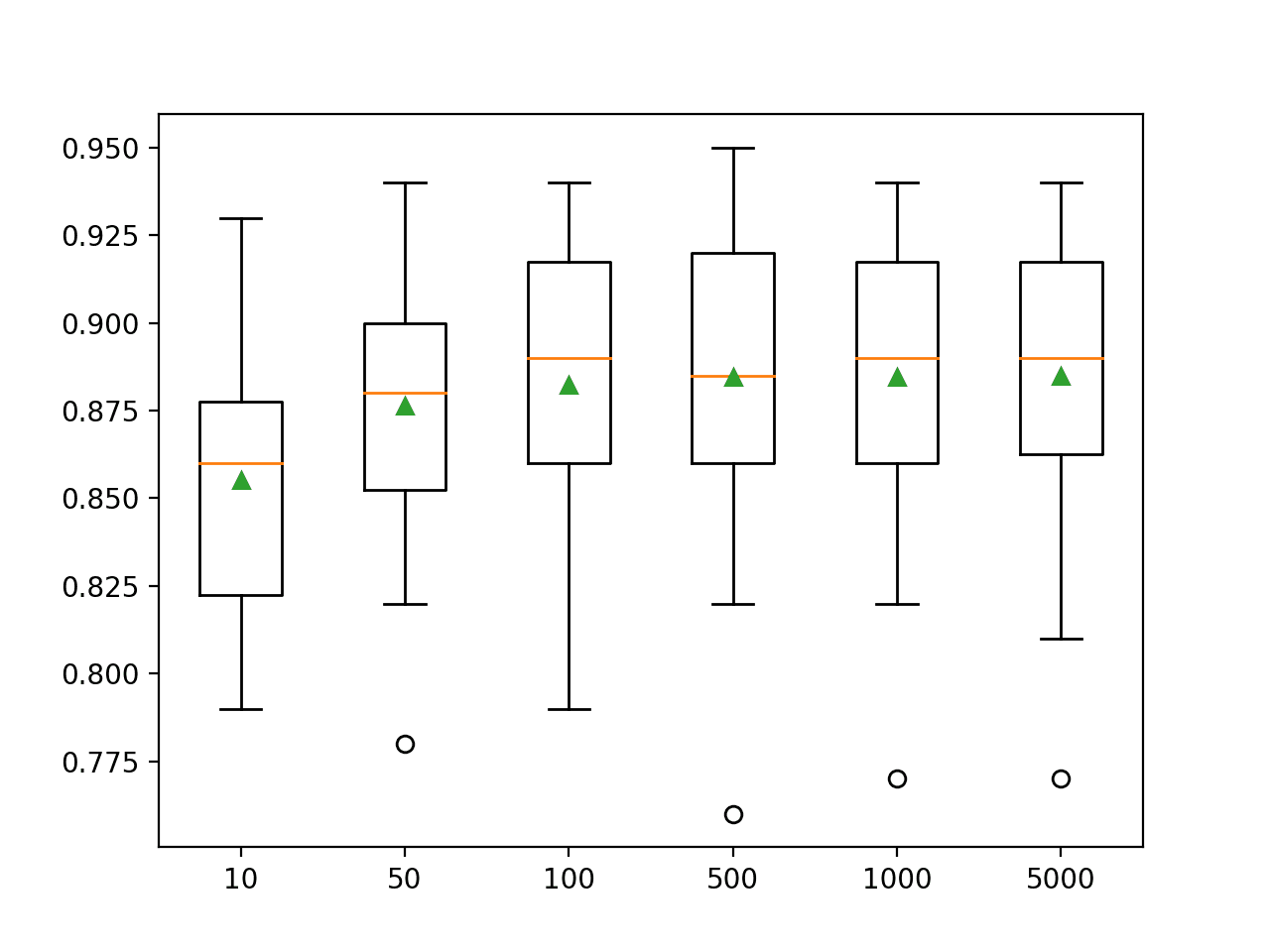

Bagging can be used with any machine learning algorithm, but it's particularly useful for decision trees because they inherently have high variance and bagging is able to reduce it. As highlighted in this study, the main difference between … Each subset is then used to train its own decision tree, so we end up with an ensemble of different models.

Bagging is used when our objective is to reduce the variance of a decision tree. Bagging uses bootstrap sampling to provide each model with its own training set. Data plays a significant role in the …
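Why averaging reduces variance can be made precise with a standard identity. Assume, as an idealization that is ours rather than the original's, that the B bagged models' predictions \(\hat f_b(x)\) each have variance \(\sigma^2\) and every pair has correlation \(\rho\):

$$
\operatorname{Var}\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right)
= \frac{1}{B^2}\left(B\sigma^2 + B(B-1)\rho\sigma^2\right)
= \rho\sigma^2 + \frac{1-\rho}{B}\sigma^2
$$

As B grows, the second term shrinks toward zero, but the first term \(\rho\sigma^2\) remains. So bagging helps most when the individual models are high-variance (like deep decision trees) and not too strongly correlated with one another.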

High bias can cause a learning algorithm to miss important information and correlations between the independent variables and the class labels, thereby underfitting the model. The bagging method, which Leo Breiman introduced in 1996, consists of three fundamental steps: bootstrapping, parallel training, and aggregation. Bootstrap aggregation, commonly known as bagging, is a powerful and simple ensemble method.
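One way to write the three steps of bagging, using notation introduced here for illustration: a training set \(D\) of \(n\) labelled pairs \((x_i, y_i)\), \(B\) bootstrap rounds, and a generic placeholder \(\text{fit}\) for whatever base learner is used. The aggregation step averages predictions for regression and takes a majority vote for classification.

$$
\begin{aligned}
&\text{1. Bootstrapping: } D_b = \{(x_{i_j}, y_{i_j})\}_{j=1}^{n},\quad i_j \sim \text{Uniform}\{1,\dots,n\} \text{ (with replacement)},\quad b = 1,\dots,B\\
&\text{2. Parallel training: } \hat f_b = \text{fit}(D_b) \text{ independently for each } b\\
&\text{3. Aggregation: } \hat f_{\text{bag}}(x) = \frac{1}{B}\sum_{b=1}^{B}\hat f_b(x) \text{ (regression)},\qquad
\hat y(x) = \arg\max_{k}\sum_{b=1}^{B}\mathbf{1}\{\hat f_b(x) = k\} \text{ (classification)}
\end{aligned}
$$

Because each \(\hat f_b\) depends only on its own \(D_b\), step 2 can run in parallel, which is one practical difference from boosting, where models are fitted one after another.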

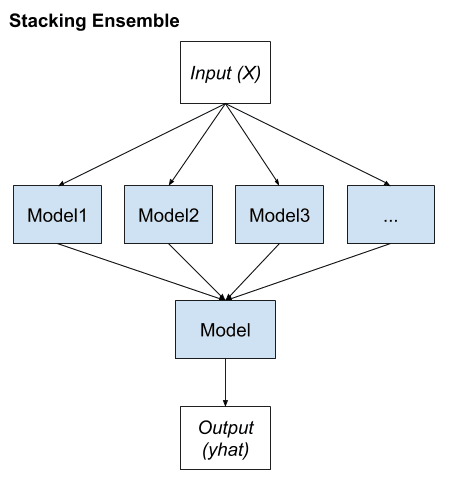

Oct 13, 2021: In this blog we will discuss seven major challenges faced by machine learning professionals. Ensemble learning is the same way. It is also easy to implement, given that it has few key hyperparameters.

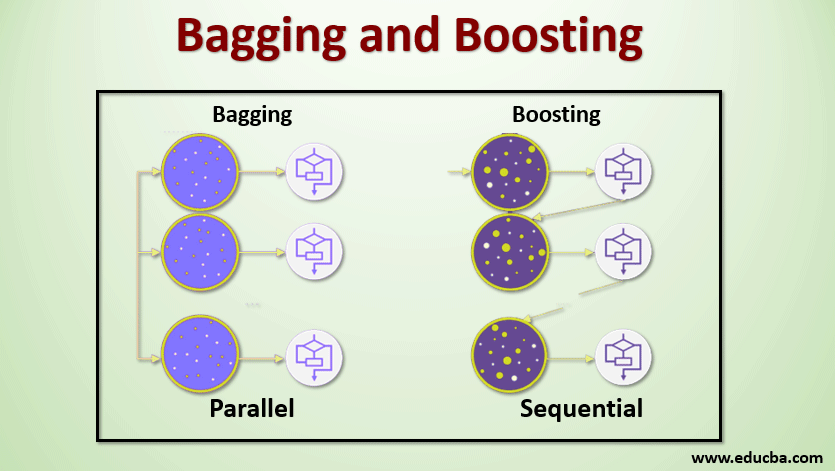

Boosting is an ensemble modeling technique that attempts to build a strong classifier from a number of weak classifiers. In Python, we can start with `from sklearn import model_selection`. Let's have a look.
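A minimal sketch comparing bagging and boosting with scikit-learn's `BaggingClassifier` and `AdaBoostClassifier`, assuming scikit-learn is installed. The iris dataset, 10-fold cross-validation, and `n_estimators=50` are illustrative choices of ours, not taken from the original; `n_estimators` and `max_samples` are two of bagging's few key hyperparameters.

```python
from sklearn import model_selection
from sklearn.datasets import load_iris
from sklearn.ensemble import AdaBoostClassifier, BaggingClassifier

# Small dataset bundled with scikit-learn (no download needed).
X, y = load_iris(return_X_y=True)

# 10-fold cross-validation; shuffle because iris rows are sorted by class.
kfold = model_selection.KFold(n_splits=10, shuffle=True, random_state=7)

models = {
    # Bagging: by default each estimator is a full decision tree fitted on a
    # bootstrap sample (max_samples=1.0 -> same size as the training fold).
    # Since trees provide predict_proba, their class probabilities are averaged.
    "bagging": BaggingClassifier(n_estimators=50, max_samples=1.0, random_state=7),
    # Boosting: by default each weak learner is a depth-1 decision tree
    # (a stump), fitted sequentially on reweighted training data.
    "boosting": AdaBoostClassifier(n_estimators=50, random_state=7),
}

results = {}
for name, model in models.items():
    scores = model_selection.cross_val_score(model, X, y, cv=kfold)
    results[name] = scores
    print(f"{name}: mean accuracy {scores.mean():.3f} (std {scores.std():.3f})")
```

The exact accuracies depend on the scikit-learn version and the random seed; the point is the structure: the same cross-validation splits are reused so the two ensembles are compared on equal terms.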

If you are a beginner who wants to understand ensembles in detail, or if you want to refresh your knowledge of variance and bias, the comprehensive article below will help. Ensemble learning is a machine learning technique in … Bagging and boosting are the two main types of ensemble learning methods.

For each of the t classifiers, every classifier Mi provides its class prediction. Bagging and boosting are ensemble learning techniques in which a set of weak learners, used as base models, are combined to create a robust learner model that can …

The bagged classifier M then counts the votes and assigns the class with the most votes to the unknown sample X. It is done by building a model using weak … What are ensemble methods?
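The procedure in the last two paragraphs (train t classifiers Mi, each on its own bootstrap sample, then let the bagged classifier M vote on a sample X) can be sketched from scratch in Python. The decision stump is a hypothetical, deliberately simple stand-in for a decision tree, and the names `fit_stump`, `fit_bagged`, `predict_bagged` and the toy data are ours for illustration.

```python
import random
from collections import Counter

def fit_stump(rows):
    """Hypothetical 1-D decision stump: choose the threshold (and the label on
    each side) that misclassifies the fewest rows. rows = [(x, label), ...]."""
    best = None
    for threshold in sorted({x for x, _ in rows}):
        left = [lab for x, lab in rows if x <= threshold]   # never empty
        right = [lab for x, lab in rows if x > threshold]   # may be empty
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = (sum(lab != left_label for lab in left)
                  + sum(lab != right_label for lab in right))
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    _, best_t, best_left, best_right = best
    return lambda x: best_left if x <= best_t else best_right

def fit_bagged(rows, t, seed=0):
    """Train t classifiers M_1..M_t, each on its own bootstrap sample."""
    rng = random.Random(seed)
    return [fit_stump(rng.choices(rows, k=len(rows))) for _ in range(t)]

def predict_bagged(models, x):
    """Bagged classifier M: every M_i votes; return the most-voted class."""
    votes = Counter(m(x) for m in models)
    return votes.most_common(1)[0][0]

# Toy 1-D data: class "a" for small x, class "b" for large x.
data = [(0.5, "a"), (1.0, "a"), (1.5, "a"), (2.0, "a"),
        (3.0, "b"), (3.5, "b"), (4.0, "b"), (4.5, "b")]
M = fit_bagged(data, t=25)
print(predict_bagged(M, 0.8), predict_bagged(M, 4.2))
```

Individual stumps can be wrong when their bootstrap sample happens to miss key rows (for example, a sample with no "b" rows predicts "a" everywhere), but such stumps are rare and are outvoted by the rest of the ensemble.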

Machine Learning Random Forest Algorithm Javatpoint

Bagging Ensemble Meta Algorithm For Reducing Variance By Ashish Patel Ml Research Lab Medium

Bagging Algorithms In Python Engineering Education Enged Program Section

Bagging And Random Forest Ensemble Algorithms For Machine Learning

Bagging And Boosting Most Used Techniques Of Ensemble Learning

What Is Bagging In Machine Learning And How To Perform Bagging

Ensemble Classifier Data Mining Geeksforgeeks

Bias Variance In Machine Learning Concepts Tutorials Bmc Software Blogs

Machine Learning Geeksforgeeks

Ensemble Learning Bagging And Boosting By Jinde Shubham Becoming Human Artificial Intelligence Magazine

How To Develop A Bagging Ensemble With Python